In recent years, large artificial intelligence (AI) models have developed rapidly and moved into the mainstream. While they improve productivity, drive innovation, and bring new opportunities, they have also raised public concern about misuse of the technology. Some criminals have used AI to impersonate celebrities in order to commit fraud, publish false advertisements, and even swindle large sums of money with forged videos, posing a serious threat to social trust and information security. Examples include the "AI Jin Dong" online dating scam, the "AI Zhang Wenhong" live-stream sales, the "AI Lei Jun" string of insult videos, and the "AI Louis Koo and Raymond Lam" game endorsements. Such incidents keep emerging, and public figures have been hit hardest by these AI impersonations, which has prompted heated public discussion of, and vigilance about, AI face-swapping and voice-cloning.

Fake face-swapping videos are cheap to make, and public figures are frequent victims

On March 5, the management companies of well-known Hong Kong artists Louis Koo and Raymond Lam each issued a statement saying they had recently discovered on social media that an online gaming platform had used AI to combine the two artists' likenesses, voices, and past video clips into promotional endorsement videos of a gambling nature. Although these AI-synthesized "promotional videos" try hard to imitate the artists' facial expressions, voices, and accents, the overall production is rough, the artists' mouth movements are stiff and unnatural when they deliver their lines, and the traces of AI synthesis are obvious. The statements pointed out that the promotional videos were all synthetic, denied that Louis Koo or Raymond Lam had any cooperation, endorsement, or promotional arrangement with the gaming platform concerned, and urged the public not to be taken in.

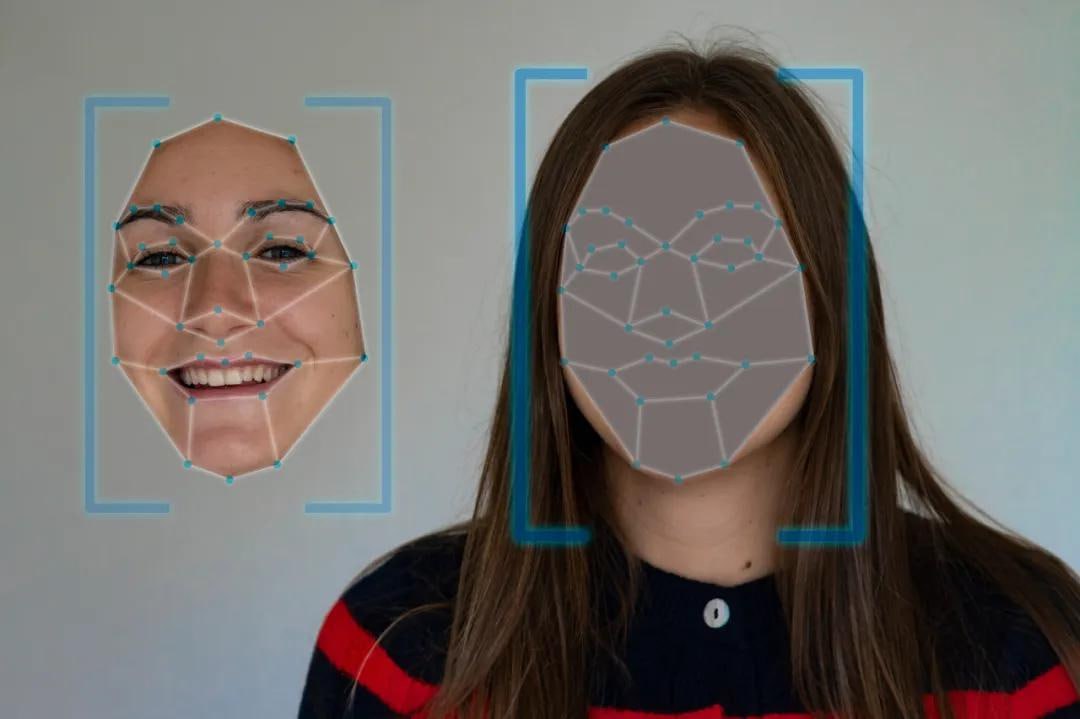

Similarly, on March 3, a Weibo user asked the well-known actress Liu Xiaoqing to confirm: "I saw a WeChat video account that has posted a lot of videos of you. They seem to be AI-generated. Are they fake?" According to reports, the WeChat video account "Xiaoqing Life", which impersonates Liu Xiaoqing, was registered by Guangzhou Ciyi Biotechnology Co., Ltd. The account had posted nine original videos, all of them AI videos synthesized from Liu Xiaoqing's image and voice. The most popular one received 78,000 likes. Liu Xiaoqing later reposted the Weibo post and set the record straight: "Oh my God, I clicked through to take a look. The image is me and the voice sounds a lot like me, but it's not me! How was this done? From now on, you won't be confused about which one is the real me, will you? I go by Liu Xiaoqing on every platform!"

The technology behind these face-swapping videos is commonly known online as "Deepfake". As the technology has advanced, today's Deepfakes not only produce higher-resolution video and more natural facial expressions than before, but also require less data and shorter training time, so the cost of forgery is extremely low. A single photo and a few seconds of audio are now enough to generate a Deepfake video, and with more photos and longer audio samples the result becomes more realistic.

AI deep synthesis technology brings great challenges to governance

As AI face-swapping and voice-cloning has become a hot topic, many public figures who have suffered from it have called for legislation to strengthen oversight of artificial intelligence and to crack down on fraud that relies on these techniques.

Lei Jun pointed out that abuse of AI face-swapping and voice-cloning has become a major source of unlawful infringement. It can easily lead to offenses such as infringement of portrait rights, infringement of citizens' personal information, and fraud, and it can even cause large-scale, irreparable damage to a person's image and reputation, creating risks for social governance. The characteristics of AI deep synthesis technology, including easy access to source material, a low barrier to use, and the difficulty of identifying infringers and their methods, pose great challenges to governance. He called for faster legislation on AI face-swapping and voice-cloning that would draw clear red lines for its application, improve the rules for identifying evidence of infringement, increase penalties for crimes committed with AI, strengthen industry self-regulation and joint governance, and expand public legal education.

Well-known actor Jin Dong also said in a media interview: "Some viewers who like my films and TV series have been deceived by AI face-swapping videos. This is very bad. I hope better rules can be established." Last November, an elderly man in Jiangxi fell in love with "AI Jin Dong" online and was almost defrauded of 2 million yuan. Fortunately, the police dissuaded and stopped him in time. On February 3 this year, the Jing'an District Court of Shanghai reported that it had recently tried a fraud case involving the impersonation of the well-known Chinese actor Jin Dong and had handed down a first-instance judgment against eight defendants, with the principal offender sentenced to three years in prison.

Chen Da, an executive of Shanghai Industrial Business Exhibition Company of Donghao Lansheng Group, cited the "AI Zhang Wenhong" live-stream sales incident as an example and argued that oversight of AI technology should be strengthened at the legislative level to prevent its use in illegal and criminal activities, especially online fraud targeting the elderly and minors. In his view, legislative oversight is not only a constraint on AI technology but also a guarantee of its healthy development. By using legislation to clarify the scope and conditions under which AI may be used, lawmakers can guide enterprises and all sectors of society to develop AI within a lawful, compliant framework, promote "technology for good", and allow the technology to better serve society.

Here are some real cases of AI face-swapping fraud:

1. Hong Kong AI "multi-person face-swapping" fraud case: In January 2024, an employee at the Hong Kong branch of a multinational company received a message purporting to come from the chief financial officer at the company's UK headquarters, inviting him to a multi-person video conference. The fraudsters used Deepfake technology to imitate the appearance and voices of the company's senior management, creating the impression that several people were attending the meeting. The employee noticed nothing unusual and made 15 transfers totaling HK$200 million to five local bank accounts. Only when he later checked with headquarters did he learn that he had been deceived.

2. "AI Jin Dong" fraud case: In 2020, a woman in Jiangxi was on the verge of divorcing her husband because of a fake "AI Jin Dong" video on social media that professed love to her; in 2024, a 65-year-old woman even tried to borrow 2 million yuan to fund filming for the "fake Jin Dong". This type of scam targets middle-aged and elderly people and exploits their emotional attachment to their idols to carry out precisely targeted fraud.

3. Case of 300,000 yuan swindled in 7 seconds: At the end of 2024, a man received an AI face-swapped video call from his "boss" and transferred 300,000 yuan after a call lasting only 7 seconds. The scammers used real-time synthetic video calls, making it difficult for the victim to tell real from fake.

4. Case of a £17,000 romance scam: In 2024, 77-year-old Nikki MacLeod was defrauded of £17,000 by a romance scammer using Deepfake technology. The scammer claimed to be working on an oil rig and asked Nikki to buy gift cards and transfer money to pay for an internet connection and travel expenses. Nikki was convinced by a video of the rig that the scammer sent.

5. Case of Ms. Wang in Jiangsu, China: In March 2025, Ms. Wang in Jiangsu received a video call from her "son". In the video, a young man with blood on his face cried and begged for money in her son's voice. Only after transferring the money did Ms. Wang discover that the "son" was a fake video synthesized with AI face-swapping.

6. Case of Ms. Zhang in Hangzhou, China: In 2025, Ms. Zhang in Hangzhou received a video of her "daughter" crying that she had been in a car accident and urgently needed money for surgery. The voice, the face, and even a birthmark were exactly the same. Only after the transfer did Ms. Zhang discover that the video was AI-synthesized. The fraudsters had stolen a 3-second video clip from WeChat Moments and used it to generate a dynamic face-swapping model to commit the fraud.